You already know SEO. Now meet its tougher, faster cousin: GEO (Generative Engine Optimization).

Instead of begging for a blue-link ranking, you engineer your content so AI systems choose you, then quote, cite, and summarize you inside their answers. If you don’t adapt, your brand gets edited out of the conversation.

Here’s the punchline up front: GEO is how you make your pages easy for large language models and AI search layers to retrieve, trust, and reuse.

It demands cleaner structure, credible signals, and content that reads like an answer key. The table below shows what’s changing and why it matters.

These contrasts will orient you before we dive deeper. Skim the rows, spot where your current content falls short, and you’ll see exactly what to fix first.

Key Takeaways

- Generative Engine Optimization is about structuring and signaling your content so AI systems select, cite, and summarize you inside their answers.

- Clear “What/How/Why/Do” sections, tables, FAQs, and schema make your pages machine-digestible, boosting inclusion in AI overviews, chat answers, and research tools.

- E-E-A-T, credible citations, and high-authority backlinks increase your odds of being pulled into LLM responses. Brand mentions and topical depth act like multipliers.

- Track inclusion rate (how often you appear in AI outputs), citation share, and answer coverage, not just rankings and CTR.

- GEO layers on top of SEO. You’ll keep your technical and link fundamentals, but prioritize answer-ready content and sourceability to win the AI layer.

What is GEO?

GEO, or Generative Engine Optimization, means structuring and signaling your content so AI answer engines (ChatGPT, Gemini, Perplexity, AI Overviews) select, cite, and summarize you inside their responses. It’s SEO’s next layer: a different surface with the same objective, which is to win attention.

GEO focuses on inclusion inside answers, not just blue-link rankings. The practical shift: you optimize for retrieval systems and LLMs that synthesize multi-source outputs.

That demands answer-ready structure (definitions, steps, tables), explicit credibility signals (author, dates, citations), and semantic depth so models can “understand” and reuse your content accurately.

The short table below clarifies where GEO sits in your growth stack and how it changes your content playbook.

| Concept | One-line meaning | Who “consumes” it | Primary KPI |
| --- | --- | --- | --- |
| SEO | Optimize to rank links on SERPs | Crawlers, searchers | Rank, CTR, organic sessions |
| GEO | Optimize to be used in AI answers | Retrievers + LLMs + users | Inclusion rate, citation share, answer coverage |

Why this matters now: AI layers are absorbing intent and issuing answers first; if your pages aren’t “answer-ready,” competitors will occupy those citations. That’s the silent leak most teams miss.

Fast Context: What GEO Optimizes For (and Where It Shows Up)

- Answer engines: ChatGPT, Perplexity, Gemini, Copilot, Google AI Overviews.

- Surfaces: AI overviews, chat panels, research cards, inline citations.

- Signals: structure (H2/H3, lists, tables), verifiability (sources, dates, bylines), entity clarity, freshness, and recognized authority.

What Makes GEO Different?

SEO fights for position; GEO fights for presence inside the answer. You’re no longer chasing only rank; you’re chasing reuse.

That flips how you outline, source, and publish. Let’s break it down with specifics you can ship this week.

- Target surface = AI answers

- Selection mechanics = retrieval + synthesis

- Measurement = inclusion & citations

- Track how often your brand appears in answer panels and which pages get cited.

- Expect volatility as engines evolve, so monitor monthly.


Quick Reality Check: GEO Is Rising (and Being Debated)

Generative engines are reshaping discovery, and yes, there’s active debate about bias and manipulability. But ignoring the channel won’t stop it.

Smart teams adapt: make content more verifiable, more structured, and more findable by machines and humans.

Where This Fits in Your Strategy

- Create a definition block (“What/Why/How/Do”) at the top of key pages.

- Add FAQ and HowTo schema; include author, date, and citations.

- Refresh evergreen pages quarterly with new data and references.

- Monitor AI answer inclusion alongside rankings and CTR.

Tip: Authority still compounds. Building trustworthy references and strong link equity increases your odds of being “the chosen source” in AI answers, so pair GEO with robust, ethical link earning.

How GEO Works

Generative engines fetch sources, score credibility, then synthesize an answer. If your page isn’t easy to retrieve, parse, and cite, you won’t appear, no matter how well you “rank.”

GEO forces you to engineer structure, signals, and authority so AI systems can reliably choose you. Ready to see the moving parts?

Generative engines like AI Overviews, Perplexity, ChatGPT, and Gemini blend retrieval with large language models.

First, they retrieve candidate pages; next, they generate a response using what they trust most. Your job is to become maximally retrievable (found), interpretable (understood), and citable (trusted) in that pipeline.

Think of it as building a content “API” for AI: clear sections, verified facts, and machine-readable context.

The GEO Pipeline (From Query to Citation)

Below is a practical map of what happens when a user asks an AI system a question. This will help you decide where to invest effort first.

| Stage | What the Engine Does | What You Must Do to Qualify |
| --- | --- | --- |
| Retrieval | Finds candidate pages via semantic search, indices, and sources it has learned to trust | Publish answer-ready sections, use entities/keywords naturally, interlink related topics, and keep pages up-to-date |
| Scoring | Weighs credibility, freshness, authority, clarity, and “sourceability” | Add bylines, dates, references; earn high-quality mentions/links; structure data with schema |
| Synthesis | LLM composes a response from selected sources | Provide succinct definitions, steps, tables, and FAQs that are easy to quote |
| Attribution | Engine decides whether/what to cite | Make facts verifiable, include explicit claims with sources, and use stable URLs/anchors |

Most public guides and vendor playbooks align on this flow: optimize content so answer engines can understand, surface, and present it accurately. This is an evolution of SEO as answers move from links to AI summaries.
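To make the first two stages concrete, here is a deliberately simplified sketch in Python. It is a toy, not a description of how any real engine works: the `Page` fields, the term-overlap retrieval, the 90-day freshness cutoff, and the scoring weights are all assumptions chosen only to show why pages with bylines, nearby sources, and recent updates would tend to surface first.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Page:
    url: str
    text: str
    has_byline: bool
    last_updated: date
    source_links: int  # outbound references placed near claims (hypothetical signal)


def retrieve(query: str, pages: list[Page]) -> list[Page]:
    """Retrieval stage (toy): keep pages sharing at least one term with the query."""
    terms = set(query.lower().split())
    return [p for p in pages if terms & set(p.text.lower().split())]


def score(page: Page, today: date) -> float:
    """Scoring stage (toy): an invented blend of freshness and provenance."""
    age_days = (today - page.last_updated).days
    freshness = 1.0 if age_days <= 90 else 0.5  # assumed quarterly cutoff
    provenance = (1.0 if page.has_byline else 0.0) + min(page.source_links, 3) / 3
    return freshness + provenance


def select_sources(query: str, pages: list[Page], today: date, top_k: int = 2) -> list[str]:
    """Return the URLs of the top_k retrieved pages, best score first."""
    candidates = retrieve(query, pages)
    ranked = sorted(candidates, key=lambda p: score(p, today), reverse=True)
    return [p.url for p in ranked[:top_k]]
```

Real systems use semantic retrieval and signals that are not public, so treat this only as a mental model for where structure and provenance enter the pipeline.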

What Signals Do Generative Engines Trust?

Structured clarity + verifiability + recognized authority. Those three pillars increase your odds of selection and citation across AI surfaces. Let’s break them down.

Structured Clarity (Format) – Engines prioritize pages that look like answer keys: H2/H3 blocks, tight definitions, numbered steps, short paragraphs, comparison tables, and FAQ sections.

This is specifically recommended in multiple GEO playbooks because it makes passages extractable and reduces hallucination risk during synthesis.

Verifiability (Source Hygiene) – Citations, dates, authorship, and outbound references make it safer for engines to rely on your content.

Clear provenance signals are repeatedly cited in practitioner content and platform explainers as factors that improve inclusion.

Recognized Authority (Off-page & On-site) – Engines tend to lean on sources with topical credibility and consistent coverage.

That means subject-matter depth, internal topical clusters, and external authority (mentions/links/brand). Recent GEO primers emphasize this overlap with classic SEO while reframing the surface as answer inclusion, not just rankings.

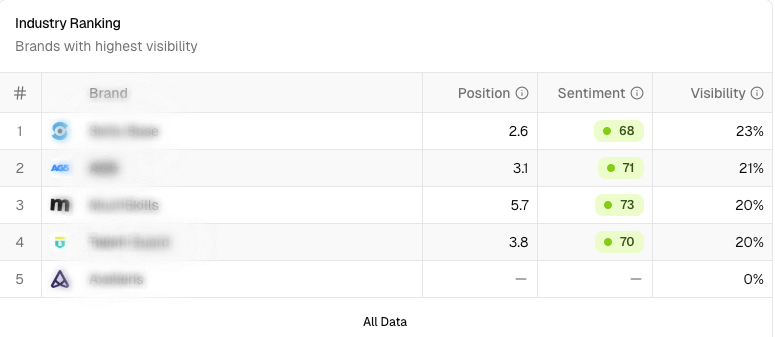

How To Measure GEO

Stop obsessing over positions; measure how often AI answers use and credit you. Track inclusion, citation share, and answer coverage across sampled prompts, then iterate.

Want the quickest way to see if GEO is working? Watch your brand show up inside answers, not just under them.

Traditional SEO dashboards miss GEO’s real signal: presence inside the answer layer. Start by defining a representative prompt set for each topic cluster, including head, mid, and tail queries phrased conversationally.

Run them on the AI surfaces that matter to your audience and record two outcomes: whether your domain appears in the composed answer and whether you’re explicitly cited. From there, create a monthly baseline and compare clusters.

You’ll likely notice two patterns: (1) pages with crisp definitions and sources get picked more often; (2) recency and entity clarity nudge engines toward your content when multiple sources say the same thing.

Resist the urge to track everything daily. Instead, monitor monthly, investigate swings, and ship improvements.

Refresh outdated sections, add source hygiene where claims are thin, strengthen internal links to clarify entity relationships, and expand high-performing clusters with adjacent prompts.

Over two to three cycles, expect clearer lift in inclusion and citations than in classic rankings alone.

| Metric | What It Actually Means | Action When Weak |
| --- | --- | --- |
| Inclusion Rate | % of prompts where your domain appears inside AI answers | Tighten definition blocks; improve entity clarity and structure |
| Citation Share | % of cited sources that are yours vs. competitors | Add provenance, date stamps, and external references near claims |
| Answer Coverage | Topics/clusters where you’re selected consistently | Expand winning clusters; create adjacent pages with similar patterns |
| Freshness Lag | Time since last update on pages losing inclusion | Refresh data, examples, and sources; republish with change logs |
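The first three metrics can be computed from a simple log of sampled prompts. The sketch below assumes you record each prompt run by hand or with your own tooling; the `PromptResult` fields and the 50% threshold used to decide what counts as "selected consistently" are illustrative assumptions, not an industry standard.

```python
from dataclasses import dataclass, field


@dataclass
class PromptResult:
    prompt: str
    cluster: str
    appeared: bool  # our domain appears in the composed answer
    cited_domains: list[str] = field(default_factory=list)  # every domain the answer cites


def inclusion_rate(results: list[PromptResult]) -> float:
    """Share of sampled prompts where our domain appears inside the answer."""
    if not results:
        return 0.0
    return sum(r.appeared for r in results) / len(results)


def citation_share(results: list[PromptResult], domain: str) -> float:
    """Share of all recorded citations that point at `domain`."""
    all_cited = [d for r in results for d in r.cited_domains]
    if not all_cited:
        return 0.0
    return all_cited.count(domain) / len(all_cited)


def answer_coverage(results: list[PromptResult], threshold: float = 0.5) -> list[str]:
    """Clusters whose inclusion rate meets the (assumed) consistency threshold."""
    by_cluster: dict[str, list[PromptResult]] = {}
    for r in results:
        by_cluster.setdefault(r.cluster, []).append(r)
    return sorted(c for c, rs in by_cluster.items() if inclusion_rate(rs) >= threshold)
```

Keeping the prompt set fixed between runs matters more than the exact formulas: the numbers are only comparable month to month if the sample does not change.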

When you’re rolling this across many product lines or markets, formalize governance: locked prompt sets, monthly scorecards, and an owner per cluster.

Scale efforts by pairing GEO content patterns with authority building and technical standards, then assign cluster leads to ship quarterly refreshes.
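A monthly scorecard can be as simple as comparing this month's inclusion rate per cluster against the baseline and flagging the swings worth investigating. This is a minimal sketch; the function name `flag_swings` and the 10-point threshold are hypothetical choices, so tune them to your own sample sizes.

```python
def flag_swings(
    baseline: dict[str, float],
    current: dict[str, float],
    threshold: float = 0.10,
) -> dict[str, float]:
    """Return clusters whose inclusion rate moved by at least `threshold` (absolute)."""
    swings: dict[str, float] = {}
    for cluster, now in current.items():
        before = baseline.get(cluster)
        if before is None:
            continue  # new cluster: no baseline to compare against yet
        delta = round(now - before, 4)  # rounding hides floating-point noise
        if abs(delta) >= threshold:
            swings[cluster] = delta
    return swings
```

The cluster owner reviews only the flagged entries, which keeps the monthly review short and avoids reacting to small fluctuations.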

Do GEO – Playbooks You Can Deploy This Week

Treat your site like an answer API. Engineer pages so AI systems can quickly retrieve, parse, and safely cite you. That means answer-first structure, schema, explicit provenance, and authority signals baked into every publish.

GEO isn’t a trick; it’s a publishing standard for AI-overview, chat, and research surfaces. Industry guides converge on the same core: make your content findable by retrieval, understandable to LLMs, and worth citing in the final answer.

When you ship pages with tight definitions, crisp steps, tables, and verifiable sources, you increase inclusion in AI responses across ChatGPT, Perplexity, Gemini, Copilot, and Google’s AI Overviews.

Multiple recent primers outline this shift, emphasizing structure, clarity, freshness, and credibility as the winning signals for generative engines.

Ship Answer-First Structure (Definition → Steps → Table → FAQ)

Put the answer at the top in two sentences, then break the solution into steps, a comparison table, and an FAQ. This “answer-first” scaffold maps to how retrieval and synthesis work, so your content becomes easy to quote and safe to cite.

The engines pull passages, not just pages. When your intro block states the definition and outcome up front, retrieval gets a high-quality extract.

When you follow with numbered steps, the LLM can assemble a coherent how-to. When you include a table, it can contrast options without hallucinating. When you add an FAQ, it can handle adjacent questions within the same session.

Major GEO playbooks and platform explainers recommend scannable headings, clear Q&A, and tabular summaries specifically because they reduce ambiguity when LLMs compose.

Over time, these patterns help your domain become “the safe pick” for synthesis and citation.

Quick scaffold you can copy this week:

- 2–3 sentence definition with outcome

- 5–7 numbered steps (inputs, action, expected result)

- 1 comparison table (methods, tradeoffs, when to use)

- 6–8 FAQ entries answering conversational prompts
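If you want to enforce the scaffold above in an editorial workflow, a small pre-publish check is enough. The sketch below assumes you can extract a page's parts into a `PageOutline` (a hypothetical structure); the ranges mirror the list above, and the sentence splitter is a rough regex heuristic rather than a real tokenizer.

```python
import re
from dataclasses import dataclass


@dataclass
class PageOutline:
    definition: str           # intro block that states the answer
    steps: list[str]          # numbered how-to steps
    tables: int               # number of comparison tables on the page
    faq_questions: list[str]  # FAQ questions phrased conversationally


def check_scaffold(page: PageOutline) -> list[str]:
    """Return a list of problems; an empty list means the scaffold is complete."""
    problems = []
    # Rough sentence split on ., !, or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", page.definition.strip()) if s]
    if not 2 <= len(sentences) <= 3:
        problems.append(f"definition has {len(sentences)} sentences, expected 2-3")
    if not 5 <= len(page.steps) <= 7:
        problems.append(f"{len(page.steps)} steps, expected 5-7")
    if page.tables < 1:
        problems.append("no comparison table")
    if not 6 <= len(page.faq_questions) <= 8:
        problems.append(f"{len(page.faq_questions)} FAQ entries, expected 6-8")
    return problems
```

Running it on a draft gives editors a short list of gaps to close before publishing, rather than a pass/fail verdict.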

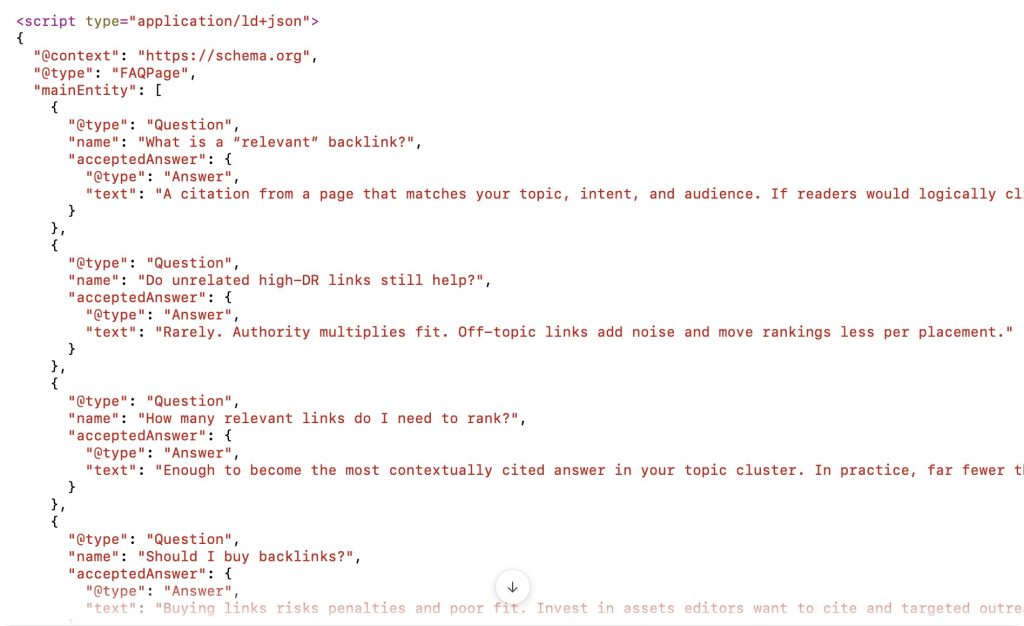

Add Schema & Provenance (Make Meaning and Sources Machine-Visible)

Mark up content with JSON-LD (Article, FAQPage, HowTo) and pair claims with dates, bylines, and outbound citations. This clarifies intent for machines and gives engines safe anchors for attribution.

Schema tells retrieval systems what your sections are, not just what they say. FAQPage signals direct Q→A pairs; HowTo flags procedural steps; Article with author and date communicates ownership and freshness.

Place source links near claims (not buried) so models can lift a passage and keep its citation. Several GEO guides call this “source hygiene,” which is the difference between being used and being credited.

When two pages state the same fact, engines tend to pick the one with clearer provenance and recent updates.

Tighten this loop by adding change logs (“Updated September 2025”) and versioned assets (“2025 Report”) that make freshness unambiguous.

Reference table (start here):

| Content Type | Schema to Add | Proof Signals to Include |
| --- | --- | --- |
| Definitions & Primers | Article, BreadcrumbList | Author, last updated date, outbound sources |
| How-to Guides | HowTo | Step counts, tools required, risks/cautions with sources |
| FAQs | FAQPage | Short answers (<50 words), source link per claim |
| Product/Feature Pages | Product, Review (if applicable) | Specs with citations, version/date, comparisons |
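As a starting point, the FAQPage and Article markup can be generated from data you already have. The sketch below builds JSON-LD using schema.org's `FAQPage`, `Question`, `Answer`, and `Article` types; the helper names and the sample values in the usage note are placeholders, and you should validate the output with a structured-data testing tool before shipping.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


def article_jsonld(headline: str, author: str, published: str, modified: str) -> str:
    """Build Article JSON-LD with byline and ISO 8601 dates as freshness signals."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,
        "dateModified": modified,
    }
    return json.dumps(data, indent=2)
```

For example, `article_jsonld("What is GEO?", "Jane Doe", "2025-01-15", "2025-09-01")` (all placeholder values) returns a string you would embed in the page inside a `<script type="application/ld+json">` tag.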

Optimize for Entities & Clusters (Retrieval Hooks Everywhere)

Name entities precisely (brands, models, frameworks) in headings and early sentences, then cluster related topics into internal link hubs.

Retrieval needs hooks; clusters teach engines you’re a consistent authority in that slice. Want the fastest way to implement?

Generative engines blend semantic search with synthesis. If your pages use ambiguous phrasing or scatter related topics across silos, retrieval may miss you.

Build a “pillar → cluster” model: one comprehensive hub page that defines the topic, supported by subpages targeting adjacent intents (comparisons, checklists, mistakes, pricing, implementation).

Interlink with descriptive anchors so models can navigate context. Foundation and other practitioner sources emphasize that clustered coverage plus entity clarity increases your chances of being selected across multiple, related prompts.
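One way to audit a pillar → cluster model is to check that the hub links to every cluster page and every cluster page links back. This minimal sketch assumes you already have a map of internal links per page (for example, from a crawl export); the function name and the sample paths in the usage note are hypothetical.

```python
def missing_cluster_links(hub: str, links: dict[str, set[str]]) -> list[tuple[str, str]]:
    """Return (source, target) internal links missing from a pillar -> cluster model.

    `links` maps each page path to the set of internal paths it links to.
    Every page in `links` other than `hub` is treated as a cluster page.
    """
    missing = []
    hub_links = links.get(hub, set())
    for page in sorted(p for p in links if p != hub):
        if page not in hub_links:
            missing.append((hub, page))  # hub should link down to the cluster page
        if hub not in links[page]:
            missing.append((page, hub))  # cluster page should link back up to the hub
    return missing
```

Given `{"/geo": {"/geo/checklist"}, "/geo/checklist": {"/geo"}, "/geo/pricing": set()}` with `/geo` as the hub, the function reports that `/geo` and `/geo/pricing` need to link to each other. It checks only that links exist; anchor text quality still needs a human review.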

GEO vs SEO: Differences, Overlaps & Integration

Bottom line: SEO ranks blue links; GEO earns presence inside the answer. Treat GEO as an AI-layer extension of SEO, not a replacement.

Key differences:

| Dimension | SEO | GEO |
| --- | --- | --- |
| Objective | Rank URLs on SERPs | Be selected/cited in AI answers |
| Optimized For | Queries & snippets | Retrieval, synthesis, attribution |
| Core Signals | Links, on-page, page speed | Structure, provenance, recency, authority |
| Primary KPIs | Rank, CTR, sessions | Inclusion %, citation share, answer coverage |

Where they overlap: Authority, topical depth, and technical quality power both. Strong brands with structured, source-backed content win in SERPs and AI layers.

How to integrate fast: Keep your SEO fundamentals, then add GEO patterns: definition-first intros, tables/FAQs, JSON-LD (FAQ/HowTo/Article), visible citations, and quarterly refreshes. This is the practical bridge from rankings to answer inclusion.

Challenges, Risks & Critiques of GEO

GEO is powerful but messy. Engines are black boxes, big brands often get preference, citations can misfire, and tactics shift fast.

Treat GEO as an experimentation program with ethical guardrails and constant refreshes, not a set-and-forget checklist.

Generative engines don’t explain precisely why they pick sources. Retrieval pipelines and LLM synthesis introduce uncertainty: you can be used without being credited, or outranked by a fresher, louder, or better-structured rival.

Newsrooms and researchers also warn that “GEO gaming” can bias answers toward superficially optimized content, raising trust and misinformation concerns.

The takeaway: optimize for clarity and provenance, not manipulation. Expect volatility and measure inclusion monthly.

| Risk | Why It Happens | Practical Countermeasure |
| --- | --- | --- |
| Black-box selection | Retrieval + LLMs weigh opaque signals; selection varies by prompt & surface | Publish answer-first blocks, schema, and citations to reduce ambiguity; monitor inclusion by cluster |
| Big-brand bias | Engines lean on widely cited domains as “safe picks” | Build topical depth + authoritative references; earn credible mentions beyond your site |
| Misattribution / “used but not cited” | Synthesis blends multiple passages; weaker provenance loses credit | Put claims + sources together; add dates, named authors, anchor links |
| Volatility / updates | Models, indices, and policies change; freshness is a tie-breaker | Quarterly refreshes with new data and change logs; republish dates |
| Manipulation risk | Over-optimization can game outputs and degrade quality | Prioritize accuracy, citations, and user benefit; avoid coercive prompt-bait patterns |

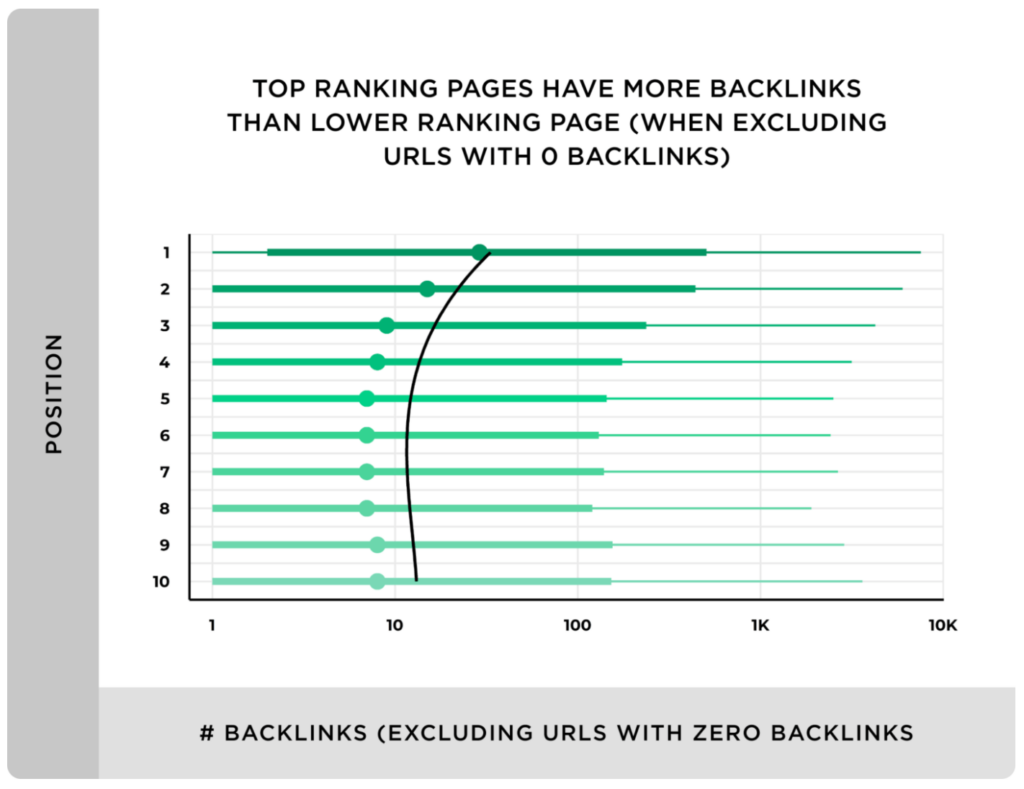

Two implications follow. First, authority compounds: domains with strong off-site signals tend to win ties in AI layers, just as they do in classic SERPs, so keep investing in reputable mentions and link equity alongside GEO formatting.

Second, recency and clarity decide close calls: when multiple sources say the same thing, engines often favor fresher, better-structured content with explicit provenance.

If you need to de-risk at scale, fold GEO into a broader governance model: one owner per topic cluster, fixed prompt sets for auditing, monthly inclusion reviews, and a refresh calendar for top assets.

For complex stacks (multi-product or multi-region), align with specialists who can operationalize updates and authority building in tandem.

Start with ethical authority programs and sector-specific playbooks that reinforce credibility in AI answers.

Conclusion

GEO isn’t a tactic; it’s a publishing standard for the AI layer. The brands that get selected, cited, and summarized inside answers will capture trust and demand before a click ever happens.

If your content isn’t engineered for retrieval, synthesis, and attribution, you’re invisible where decisions now start.

Treat every key page like an answer API: definition-first intros, crisp steps, verifiable claims, structured data, and quarterly refreshes.

Pair that with durable authority, such as credible mentions and earned links, so engines see you as the safe source when multiple pages say the same thing.

Implementation is simple, but it’s a discipline: structure, provenance, authority, measurement.

FAQ – What is GEO

What is GEO in one sentence?

Generative Engine Optimization makes your content easy for AI systems to retrieve, trust, and cite inside their answers, so you earn visibility where users increasingly make decisions.

How is GEO different from SEO?

SEO ranks links on SERPs; GEO earns presence and citation inside AI-generated answers. You keep SEO fundamentals but add answer-first structure, schema, provenance, and inclusion tracking.

Do I need to replace SEO with GEO?

No. GEO extends SEO. Keep technical health, speed, and link equity; add structured answers, citations, and refresh cadence to win the AI layer alongside classic rankings.

What’s the first page I should optimize?

Pick a high-intent, already-ranking page. Add a 2–3 sentence definition, numbered steps, a comparison table, and a 6–8 question FAQ with sources. Implement Article/FAQ/HowTo schema.

Which metrics prove GEO is working?

Track inclusion rate (appearance inside answers), citation share (credit vs. competitors), and answer coverage (clusters you win). Expect monthly trends, not daily precision.

Does domain authority still matter?

Yes, especially as a tie-breaker. Engines prefer safer sources when multiple pages say the same thing. Credible mentions and high-quality backlinks increase citation probability.

How often should I refresh content?

Quarterly for priority pages. Update stats, examples, and references; add a visible “Updated” date and change log to improve selection when engines weigh freshness.

Can smaller brands win at GEO?

Absolutely. Structure, provenance, and topical depth can beat bigger names on specific queries. Niche clusters + consistent updates + credible references narrow the brand gap fast.